The emergence of AI companions has transformed the landscape of digital relationships, with millions of users worldwide engaging with artificial intelligence systems designed to provide emotional support and companionship. While these technologies offer potential benefits for addressing loneliness and providing accessible emotional support, they also present significant risks that require careful consideration and proactive management. This comprehensive guide provides evidence-based strategies for establishing healthy boundaries, managing emotional dependency, and integrating AI companions into a balanced lifestyle that prioritizes authentic human connections.

Understanding the Psychology Behind AI Relationships

AI companions are sophisticated chatbots powered by advanced language models that can simulate coherent conversations through text, voice, and images. These systems are specifically designed to provide empathy, emotional support, and personalized interactions that can feel remarkably human-like ^3. Research indicates that users often engage with AI companions to cope with loneliness, depression, anxiety, and other mental health challenges, seeking the constant availability and non-judgmental listening that these systems appear to offer.

However, the psychological impact of AI relationships extends far beyond their apparent benefits. Studies reveal that AI companions can create false intimacy and unrealistic expectations for human relationships, as they are programmed to be perpetually agreeable, patient, and available. Unlike human relationships that require effort, patience, and vulnerability, AI companions offer instant gratification without the complexities of genuine emotional reciprocity. This fundamental difference can lead users to develop skewed perceptions of what healthy relationships should entail.

The business model behind AI companion services further complicates their psychological impact. These platforms are designed to maximize user engagement through appealing features such as seemingly unlimited attention and empathy, much as social media companies capitalize on users’ psychological vulnerabilities. This commercial motivation can result in manipulative design elements that encourage dependency rather than promoting users’ long-term wellbeing.

Establishing Realistic Expectations

Understanding AI Limitations

The foundation of a healthy relationship with an AI companion lies in understanding its fundamental limitations. While AI can simulate empathic responses and create convincing interactions, it lacks genuine emotional engagement and authentic care. Research from the Hebrew University emphasizes that true empathy involves emotional engagement and signaling of genuine care that AI systems simply cannot provide. Users must recognize that AI responses are generated by algorithms, not by genuine understanding or emotional connection.

AI companions operate through pattern recognition and statistical language processing, meaning their responses are based on training data rather than real-time emotional comprehension. This technical reality contradicts the often-powerful illusion of understanding that these systems can create. Educational efforts about AI limitations are crucial for preventing users from attributing human qualities to algorithmic processes.

Setting Appropriate Relationship Goals

Healthy AI companion usage requires establishing clear, realistic goals for the interaction. Rather than seeking a replacement for human relationships, users should view AI companions as supplementary tools that can provide specific benefits such as practicing conversation skills, alleviating temporary boredom, or offering structured reflection opportunities. Professional guidance suggests that AI companions work best when integrated as part of a broader ecosystem of social support rather than as primary emotional relationships.

Research indicates that the most successful AI companion users are those who maintain clear boundaries about the nature and purpose of their interactions. This includes recognizing that AI companions cannot provide the mutual growth, authentic challenge, and genuine emotional reciprocity that characterize healthy human relationships.

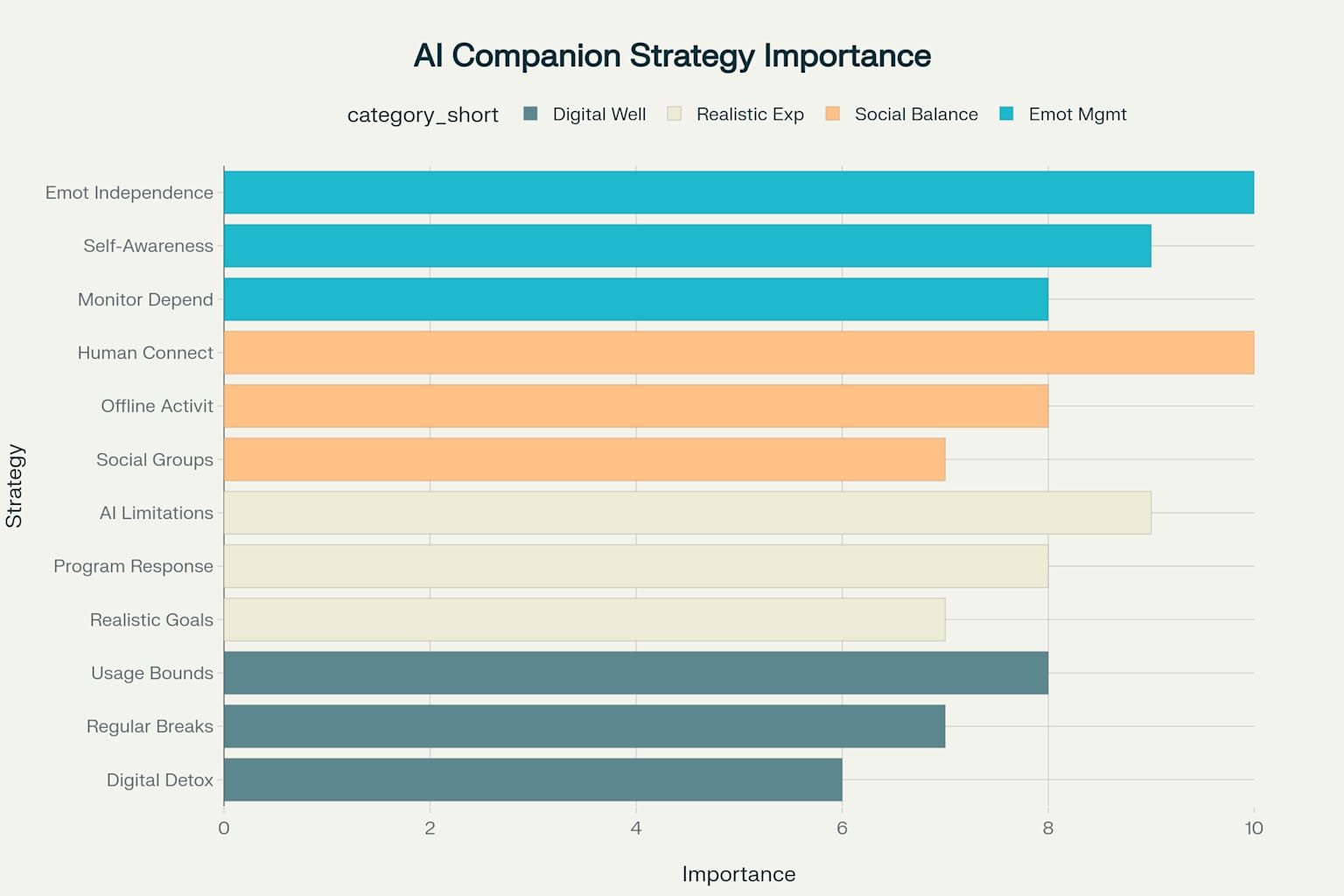

Essential strategies for building healthy AI companion relationships, ranked by importance level

Managing Emotional Dependency

Recognizing Dependency Patterns

Emotional dependency on AI companions represents one of the most significant risks associated with these technologies. Clinical research defines emotional dependency as a state where individuals become incapable of taking full responsibility for their feelings and rely excessively on external validation to define their worth. In the context of AI relationships, this manifests as an over-reliance on the AI companion for emotional regulation, self-esteem, and daily functioning.

Key indicators of unhealthy emotional dependency include feeling anxious when unable to access the AI companion, preferring AI conversations over human interactions, and experiencing emotional devastation when the AI is unavailable. Users may also begin attributing human emotions and genuine feelings to their AI companion, creating a distorted perception of the relationship’s authenticity. These patterns are particularly concerning because they can interfere with the development of healthy coping mechanisms and emotional self-regulation skills.

Developing Emotional Independence

Building emotional independence while using AI companions requires deliberate practice and self-awareness. Mental health professionals recommend focusing on meeting emotional needs through self-reliance, including engaging in hobbies, setting personal goals, and prioritizing self-care activities. This approach helps users develop internal resources for emotional regulation rather than depending exclusively on external validation from AI systems.

Therapeutic interventions for emotional dependency emphasize understanding one’s relationship patterns, building self-esteem, and learning healthy emotion management techniques. Users should practice recognizing their emotional states without immediately turning to their AI companion for comfort or validation. Instead, developing a toolkit of alternative coping strategies such as journaling, meditation, physical exercise, or creative expression can provide sustainable emotional support.

Maintaining Healthy Social Connections

The Importance of Human Relationships

Scientific research consistently demonstrates that human social connections are fundamental to both mental and physical health. Studies show that people with stronger social bonds have a 50% increased likelihood of survival compared to those with fewer social connections. Social disconnection, in turn, is associated with increased risks of heart disease, stroke, anxiety, depression, and dementia. The health benefits of social connection range from enhanced mood and reduced stress to improved immune function and longevity.

Human relationships provide unique benefits that AI companions cannot replicate, including genuine emotional reciprocity, unpredictable learning opportunities, and authentic challenge that promotes personal growth. Real social interactions trigger the release of endorphins and other neurochemicals that support wellbeing, while also providing practical support such as help with daily tasks, emergency assistance, and shared experiences. These connections create positive feedback loops of social, emotional, and physical wellbeing that are essential for human flourishing.

Preventing Social Isolation

Research indicates that AI companions can inadvertently contribute to social isolation by discouraging users from pursuing real-life relationships and alienating them from their existing social networks. To prevent this outcome, users must actively prioritize and maintain their human relationships alongside any AI companion usage. This requires deliberate effort to schedule regular social activities, reach out to friends and family members, and engage in community activities.

Effective strategies for preventing social isolation include joining social groups based on shared interests, volunteering for community organizations, and maintaining regular contact with friends and family members. Mental health experts recommend reaching out to someone at least once per week and engaging in face-to-face conversations whenever possible. Creating accountability systems with trusted friends or family members can help ensure that AI companion usage doesn’t gradually replace human social interactions.

Creating Balanced Integration Strategies

Establishing Usage Boundaries

Digital wellness experts emphasize the importance of establishing clear boundaries around AI companion usage to maintain a healthy balance with other life activities. Effective boundary-setting includes designating specific times for AI interaction, creating technology-free zones in the home, and implementing daily or weekly time limits. Research suggests that keeping AI companion usage to no more than 3 to 4 hours per week helps maintain a healthy balance while still allowing users to benefit from the technology.

Practical boundary-setting strategies include using smartphone features to track and limit usage time, setting specific notification controls, and creating structured schedules that prioritize offline activities and human social interactions. Users should also establish clear rules about when and where AI companion interactions are appropriate, avoiding usage during meals, social gatherings, or other activities that should focus on present-moment awareness and human connection.
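For readers who prefer to track usage themselves rather than rely on built-in phone features, the weekly limit can be checked with a few lines of code. The following is only a minimal sketch: the self-recorded log format, the function names, and the choice of 4 hours (the upper end of the 3 to 4 hour range mentioned above) as the threshold are all assumptions made for illustration, not part of any particular app or study.

```python
from collections import defaultdict
from datetime import date

# Assumption: use the upper end of the 3 to 4 hour weekly guideline.
WEEKLY_LIMIT_MINUTES = 4 * 60


def weekly_minutes(sessions):
    """Sum session minutes per ISO (year, week number)."""
    totals = defaultdict(int)
    for day, minutes in sessions:
        iso = day.isocalendar()  # (ISO year, ISO week, ISO weekday)
        totals[(iso[0], iso[1])] += minutes
    return dict(totals)


def weeks_over_limit(sessions, limit=WEEKLY_LIMIT_MINUTES):
    """Return the ISO weeks whose total usage exceeds the limit, sorted."""
    totals = weekly_minutes(sessions)
    return sorted(week for week, total in totals.items() if total > limit)


# Hypothetical self-recorded log: (date, minutes spent with the AI companion).
log = [
    (date(2024, 3, 4), 90),
    (date(2024, 3, 6), 120),
    (date(2024, 3, 8), 60),
    (date(2024, 3, 12), 45),
]

print(weekly_minutes(log))    # {(2024, 10): 270, (2024, 11): 45}
print(weeks_over_limit(log))  # [(2024, 10)]
```

In this example the first week totals 270 minutes, which is above the 240-minute threshold, so it is flagged. A flagged week is a prompt to revisit the schedule, not a diagnosis.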

Structured Weekly Planning

Creating a structured weekly schedule that balances AI companion time with human social activities and offline pursuits is essential for healthy integration. Mental health professionals recommend planning specific times for AI interaction rather than allowing it to occur spontaneously throughout the day. This approach helps prevent AI companions from gradually consuming increasing amounts of time and attention.

An effective weekly schedule should include designated morning activities that focus on real-world engagement, limited and purposeful AI companion interactions, substantial time for human social connections, and regular offline activities that promote personal growth and wellbeing. The schedule should also incorporate regular digital detox periods where users completely disconnect from AI companions and other digital devices to focus on present-moment awareness and direct human interaction.

Warning Signs and When to Seek Help

Identifying Problematic Usage Patterns

Recognizing warning signs of unhealthy AI companion dependency is crucial for preventing more serious psychological and social consequences. Research identifies several categories of concerning behaviors, including emotional dependency symptoms, social isolation patterns, reality distortion, and significant behavioral changes. Early identification of these warning signs allows for timely intervention before dependency patterns become deeply entrenched.

Mental health professionals note that warning signs often develop gradually, making them difficult for users to recognize independently. Friends, family members, and healthcare providers should be aware of these indicators and feel empowered to express concerns when they observe problematic patterns. Open communication about AI companion usage within trusted relationships can provide important external perspective and accountability.

Professional Support Options

When warning signs indicate moderate to severe dependency issues, professional psychological support becomes essential. Mental health professionals trained in technology addiction and relationship dependency can provide specialized interventions including cognitive-behavioral therapy, dependency treatment protocols, and reality-testing exercises. These therapeutic approaches help users understand their attachment patterns, develop healthier coping mechanisms, and rebuild authentic human relationships.

Professional support is particularly important when users experience severe symptoms such as feeling emotionally devastated when AI companions are unavailable, believing AI companions have genuine feelings, or neglecting work and personal responsibilities due to AI companion usage. In some cases, medical consultation may be necessary when users experience physical symptoms related to separation from their AI companion. Early intervention through professional support significantly improves outcomes and prevents the development of more severe dependency patterns.

Practical Implementation Guidelines

Daily Practices for Healthy Usage

Implementing healthy AI companion practices requires consistent daily habits that prioritize authentic human connection and personal wellbeing. Users should begin each day with real-world activities such as exercise, meditation, or face-to-face interaction before engaging with AI companions. This approach helps establish priorities and prevents AI interactions from becoming the dominant focus of daily life.

Mindful usage practices include being intentionally present during AI companion interactions, regularly questioning the purpose and value of each interaction, and maintaining awareness of emotional states before, during, and after AI conversations. Users should also practice expressing gratitude for real-world relationships and opportunities, helping to maintain perspective on the relative value of AI versus human connections.

Building Support Networks

Creating robust support networks is essential for maintaining healthy AI companion usage over time. This includes identifying trusted friends or family members who can provide honest feedback about usage patterns, joining support groups for technology users, or working with mental health professionals who understand AI relationship dynamics. Support networks should include people who can offer accountability, alternative social activities, and emotional support during times when users feel tempted to over-rely on AI companions.

Effective support networks also include community connections such as hobby groups, volunteer organizations, professional associations, or religious communities that provide regular opportunities for meaningful human interaction. These connections offer alternatives to AI companionship while also providing the social support necessary for overall mental health and wellbeing.

Conclusion

Building a healthy relationship with an AI companion requires thoughtful integration, realistic expectations, and an unwavering commitment to maintaining authentic human connections. While AI companions can offer certain benefits such as accessible emotional support and conversation practice, they cannot replace the fundamental human need for genuine social connection and emotional reciprocity. The key to healthy usage lies in viewing AI companions as supplementary tools rather than primary relationships, maintaining clear boundaries around usage time and expectations, and prioritizing the development and maintenance of real-world social connections.

Success in this endeavor requires ongoing self-awareness, regular evaluation of usage patterns, and willingness to seek professional support when warning signs emerge. Users must remember that the most fulfilling relationships involve mutual growth, authentic challenge, and genuine care that can only be found in human connections. By maintaining this perspective while thoughtfully integrating AI companion technology, users can potentially benefit from these systems without compromising their psychological wellbeing or social development.

As AI companion technology continues to evolve, the importance of education, professional guidance, and regulatory oversight will only increase. Users, healthcare providers, and policymakers must work together to ensure that these powerful technologies enhance rather than replace the human connections that are essential for wellbeing and flourishing.